ROS - ZED Node

To start a ZED ROS node you can use the following commands in a shell console:

- ZED:

roslaunch zed_wrapper zed.launch

- ZED Mini:

roslaunch zed_wrapper zedm.launch

- ZED 2:

roslaunch zed_wrapper zed2.launch

- ZED 2i:

roslaunch zed_wrapper zed2i.launch

Published Topics #

The ZED node publishes data on the following topics:

Left camera

rgb/image_rect_color: Color rectified image (left RGB image by default)rgb/image_rect_gray: Grayscale rectified image (left RGB image by default)rgb_raw/image_raw_color: Color unrectified image (left RGB image by default)rgb_raw/image_raw_gray: Grayscale unrectified image (left RGB image by default)rgb/camera_info: Color camera calibration datargb_raw/camera_info: Color unrectified camera calibration dataleft/image_rect_color: Left camera color rectified imageleft/image_rect_gray: Left camera grayscale rectified imageleft_raw/image_raw_color: Left camera color unrectified imageleft_raw/image_raw_gray: Left camera grayscale unrectified imageleft/camera_info: Left camera calibration dataleft_raw/camera_info: Left unrectified camera calibration data

Right camera

right/image_rect_color: Color rectified right imageright_raw/image_raw_color: Color unrectified right imageright/image_rect_gray: Grayscale rectified right imageright_raw/image_raw_gray: Grayscale unrectified right imageright/camera_info: Right camera calibration dataright_raw/camera_info: Right unrectified camera calibration data

Stereo pair

stereo/image_rect_color: stereo rectified pair images side-by-sidestereo_raw/image_raw_color: stereo unrectified pair images side-by-side

📌 Note: to retrieve the camera parameters you can subscribe to the topics

left/camera_info,right/camera_info,left_raw/camera_infoandright_raw/camera_infoDepth and point cloud

depth/depth_registered: Depth map image registered on the left image (32-bit float in meters by default)depth/camera_info: Depth camera calibration datapoint_cloud/cloud_registered: Registered color point cloudconfidence/confidence_map: Confidence image (floating point values to be used in your own algorithms)disparity/disparity_image: Disparity image

Tracking

odom: Absolute 3D position and orientation relative to the Odometry frame (pure visual odometry for ZED, visual-inertial for ZED Mini and ZED 2)pose: Absolute 3D position and orientation relative to the Map frame (Sensor Fusion algorithm + SLAM + Loop closure)pose_with_covariance: Camera pose referred to Map frame with covariancepath_odom: Sequence of camera odometry poses in Map framepath_map: Sequence of camera poses in Map frame

Mapping

mapping/fused_cloud: Fused color point cloud

📌 Note: published only if mapping is enabled, see

mapping/mapping_enabledparameterSensor Data

imu/data: Accelerometer, gyroscope, and orientation data in Earth frame [only ZED Mini and ZED 2]imu/data_raw: Accelerometer and gyroscope data in Earth frame [only ZED Mini and ZED 2]imu/mag: Calibrated magnetometer data [only ZED 2]atm_press: Atmospheric pressure data [only ZED 2]temperature/imu: Temperature of the IMU sensor [only ZED 2]temperature/left: Temperature of the left camera sensor [only ZED 2]temperature/right: Temperature of the right camera sensor [only ZED 2]left_cam_imu_transform: Transform from the left camera sensor to IMU sensor position

Object Detection [only ZED 2]

objects: array of the detected/tracked objects for each camera frame [only ZED 2]object_markers: array of markers of the detected/tracked objects to be rendered in Rviz [only ZED 2]

Diagnostic

/diagnostics: ROS diagnostic message for ZED cameras

ZED parameters #

You can specify the parameters to be used by the ZED node modifying the values in the files

params/common.yaml: common parameters to all camera modelsparams/zed.yaml: parameters for the ZED cameraparams/zedm.yaml: parameters for the ZED Mini cameraparams/zed2.yaml: parameters for the ZED 2 cameraparams/zed2i.yaml: parameters for the ZED 2i camera

General parameters #

Parameters with prefix general

| Parameter | Description | Value |

|---|---|---|

| camera_name | A custom name for the ZED camera. Used as namespace and prefix for camera TF frames | string, default=zed |

| camera_model | Type of Stereolabs camera | zed: ZED, zedm: ZED Mini, zed2: ZED 2, zed2i: ZED 2i |

| camera_flip | Flip the camera data if it is mounted upsidedown | true, false |

| zed_id | Select a ZED camera by its ID. Useful when multiple cameras are connected. ID is ignored if an SVO path is specified | int, default 0 |

| serial_number | Select a ZED camera by its serial number | int, default 0 |

| resolution | Set ZED camera resolution | 0: HD2K, 1: HD1080, 2: HD720, 3: VGA |

| grab_frame_rate | Set ZED camera grabbing framerate | int |

| gpu_id | Select a GPU device for depth computation | int, default -1 (best device found) |

| base_frame | Frame_id of the frame that indicates the reference base of the robot | string, default=base_link |

| verbose | Enable/disable the verbosity of the SDK | true, false |

| svo_compression | Set SVO compression mode | 0: LOSSLESS (PNG/ZSTD), 1: H264 (AVCHD) ,2: H265 (HEVC) |

| self_calib | Enable/disable self calibration at starting | true, false |

Video parameters #

Parameters with prefix video

| Parameter | Description | Value |

|---|---|---|

| img_downsample_factor | Resample factor for images [0.01,1.0]. The SDK works with native image sizes, but publishes rescaled image. | double, default=1.0 |

| extrinsic_in_camera_frame | If false extrinsic parameter in camera_info will use ROS native frame (X FORWARD, Z UP) instead of the camera frame (Z FORWARD, Y DOWN) [true use old behavior as for version < v3.1] | true, false |

Depth parameters #

Parameters with prefix depth

| Parameter | Description | Value |

|---|---|---|

| quality | Select depth map quality | ‘0’: NONE, ‘1’: PERFORMANCE, ‘2’: QUALITY, ‘3’: ULTRA |

| sensing_mode | Select depth sensing mode (change only for VR/AR applications) | 0: STANDARD, 1: FILL |

| depth_stabilization | Enable depth stabilization. Stabilizing the depth requires an additional computation load as it enables tracking | 0: disabled, 1: enabled |

| openni_depth_mode | Convert 32bit depth in meters to 16bit in millimeters | 0: 32bit float meters, 1: 16bit uchar millimeters |

| depth_downsample_factor | Resample factor for depth data matrices [0.01,1.0]. The SDK works with native data sizes, but publishes rescaled matrices (depth map, point cloud, …) | double, default=1.0 |

| min_depth | Minimum value allowed for depth measures | Min: 0.3 (ZED) or 0.1 (ZED Mini), Max: 3.0 - Note: reducing this value will require more computational power and GPU memory. In cases of limited compute power, increasing this value can provide better performance |

| max_depth | Maximum value allowed for depth measures | Min: 1.0, Max: 30.0 - Values beyond this limit will be reported as TOO_FAR |

Position parameters #

Parameters with prefix pos_tracking

| Parameter | Description | Value |

|---|---|---|

| pos_tracking_enabled | Enable/disable positional tracking from start | true, false |

| publish_tf | Enable/disable publish TF frames | true, false |

| publish_map_tf | Enable/disable publish map TF frame | true, false |

| map_frame | Frame_id of the pose message | string, default=map |

| odometry_frame | Frame_id of the odom message | string, default=odom |

| area_memory_db_path | Path of the database file for loop closure and relocalization that contains learnt visual information about the environment | string, default=`` |

| save_area_memory_db_on_exit | Save the “known visual features” map when the node is correctly closed to the path indicated by area_memory_db_path | true, false |

| area_memory | Enable Loop Closing | true, false |

| floor_alignment | Indicates if the floor must be used as origin for height measures | true, false |

| initial_base_pose | Initial reference pose | vector, default=[0.0,0.0,0.0, 0.0,0.0,0.0] -> [X, Y, Z, R, P, Y] |

| init_odom_with_first_valid_pose | Indicates if the odometry must be initialized with the first valid pose received by the tracking algorithm | true, false |

| path_pub_rate | Frequency (Hz) of publishing of the trajectory messages | float, default=2.0 |

| path_max_count | Maximum number of poses kept in the pose arrays (-1 for infinite) | int, default=-1 |

| two_d_mode | Force navigation on a plane. If true the Z value will be fixed to “fixed_z_value”, roll and pitch to zero | true, false |

| fixed_z_value: | Value to be used for Z coordinate if two_d_mode is true | double, default: 0.0 |

Mapping parameters #

Parameters with prefix mapping

| Parameter | Description | Value |

|---|---|---|

| mapping_enabled | Enable/disable the mapping module | true, false |

| resolution | Resolution of the fused point cloud [0.01, 0.2] | double, default=0.1 |

| max_mapping_range | Maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0] | double, default=-1 |

| fused_pointcloud_freq | Publishing frequency (Hz) of the 3D map as fused point cloud | double, default=1.0 |

Sensors parameters (only ZED Mini, ZED 2, and ZED 2i) #

Parameters with prefix sensors

| Parameter | Description | Value |

|---|---|---|

| sensors_timestamp_sync | Synchronize Sensors message timestamp with latest received frame | true, false |

| publish_imu_tf | Publish IMU -> <cam_name>_left_camera_frame TF | true, false |

Object Detection parameters (not available for ZED) #

Parameters with prefix object_detection

| Parameter | Description | Value |

|---|---|---|

| od_enabled | Enable/disable the Object Detection module when the node starts | true, false |

| model | Object Detection module to be used | string, ‘MULTI_CLASS_BOX_FAST’, ‘MULTI_CLASS_BOX_MEDIUM’, ‘MULTI_CLASS_BOX_ACCURATE’, ‘PERSON_HEAD_BOX_FAST’, ‘PERSON_HEAD_BOX_ACCURATE’ |

| confidence_threshold | Minimum value of the detection confidence of an object | int [0,100] |

| max_range: | Maximum detection range | double, default: 15. |

| object_tracking_enabled | Enable/disable the tracking of the detected objects | true, false |

| body_fitting: | Enable/disable body fitting for ‘HUMAN_BODY’ models | true, false |

| mc_people: | Enable/disable the detection of persons for ‘MULTI_CLASS_BOX’ models | true, false |

| mc_vehicle: | Enable/disable the detection of vehicles for ‘MULTI_CLASS_BOX’ models | true, false |

| mc_bag: | Enable/disable the detection of bags for ‘MULTI_CLASS_BOX’ models | true, false |

| mc_animal: | Enable/disable the detection of animals for ‘MULTI_CLASS_BOX’ models | true, false |

| mc_electronics: | Enable/disable the detection of electronic devices for ‘MULTI_CLASS_BOX’ models | true, false |

| mc_fruit_vegetable: | Enable/disable the detection of fruits and vegetables for ‘MULTI_CLASS_BOX’ models | true, false |

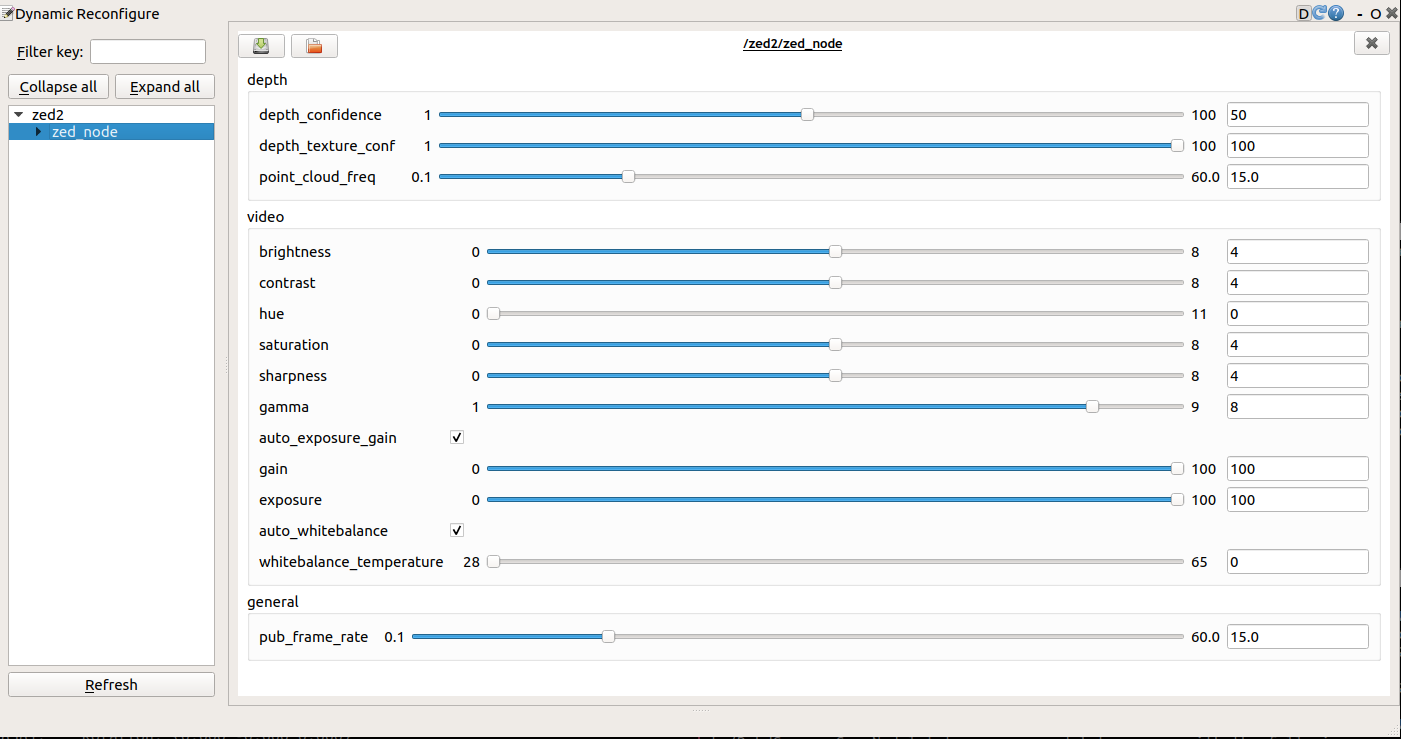

Dynamic parameters #

The ZED node lets you reconfigure these parameters dynamically:

| Parameter | Description | Value |

|---|---|---|

| pub_frame_rate | Frequency of the publishing of Video and Depth images (equal or minor to grab_frame_rate value) | float [0.1,100.0] |

| depth_confidence | Threshold to reject depth values based on their confidence. Each depth pixel has a corresponding confidence. A lower value means more confidence and precision (but less density). An upper value reduces filtering (more density, less certainty). A value of 100 will allow values from 0 to 100. (no filtering). A value of 90 will allow values from 10 to 100. (filtering lowest confidence values). A value of 30 will allow values from 70 to 100. (keeping highest confidence values and lowering the density of the depth map). The value should be in [1,100]. By default, the confidence threshold is set at 100, meaning that no depth pixel will be rejected. | int [0,100] |

| depth_texture_conf | Threshold to reject depth values based on their textureness confidence. A lower value means more confidence and precision (but less density). An upper value reduces filtering (more density, less certainty). The value should be in [1,100]. By default, the confidence threshold is set at 100, meaning that no depth pixel will be rejected. | int [0,100] |

| point_cloud_freq | Frequency of the pointcloud publishing (equal or minor to frame_rate value) | float [0.1,100.0] |

| brightness | Defines the brightness control | int [0,8] |

| contrast | Defines the contrast control | int [0,8] |

| hue | Defines the hue control | int [0,11] |

| saturation | Defines the saturation control | int [0,8] |

| sharpness | Defines the sharpness control | int [0,8] |

| gamma | Defines the gamma control | int [1,9] |

| auto_exposure_gain | Defines if the Gain and Exposure are in automatic mode or not | true, false |

| gain | Defines the gain control [only if auto_exposure_gain is false] | int [0,100] |

| exposure | Defines the exposure control [only if auto_exposure_gain is false] | int [0,100] |

| auto_whitebalance | Defines if the White balance is in automatic mode or not | true, false |

| whitebalance_temperature | Defines the color temperature value (x100) | int [42,65] |

To modify a dynamic parameter, you can use the GUI provided by the rqt stack:

rosrun rqt_reconfigure rqt_reconfigure

Transform frames #

The ZED ROS wrapper broadcasts multiple coordinate frames that each provide information about the camera’s position and orientation. If needed, the reference frames can be changed in the launch file.

base_frameis the current position and orientation of the reference base of the robot<camera_name>_camera_centeris the current position and orientation of ZED, determined by visual odometry and the tracking module<camera_name>_right_camerais the position and orientation of the ZED’s right camera<camera_name>_right_camera_opticalis the position and orientation of the ZED’s right camera optical frame<camera_name>_left_camerais the position and orientation of the ZED’s left camera<camera_name>_left_camera_opticalis the position and orientation of the ZED’s left camera optical frame<camera_name>_imu_linkis the origin of the inertial data frame (ZED Mini and ZED 2 only)<camera_name>_mag_linkis the origin of the magnetometer frame (ZED 2 only)<camera_name>_baro_linkis the origin of the barometer frame (ZED 2 only)<camera_name>_temp_left_linkis the origin of the left temperature frame (ZED 2 only)<camera_name>_temp_right_linkis the origin of the right temperature frame (ZED 2 only)

For RVIZ compatibility, the root frame map_frame is called map.

The TF tree generated by the zed_wrapper reflects the standard described in REP105.

The odometry frame is updated using only the “visual odometry” information.

The map frame is updated using the Tracking algorithm provided by the Stereolabs SDK, fusing the inertial information from the IMU sensor if using a ZED Mini camera.

map_frame (`map`)

└─odometry_frame (`odom`)

└─base_frame (`base_link`)

└─camera_frame (`<camera_name>_camera_center`)

| └─left_camera_frame (`<camera_name>_left_camera_frame`)

| | └─left_camera_optical (`<camera_name>_left_camera_optical`)

| | └─left_temperature:frame (`<camera_name>_temp_left_link`)

| └─right_camera_frame (`<camera_name>_right_camera_frame`) (*only ZED 2 and ZED 2i*)

| └─right_camera_optical (`<camera_name>_right_camera_optical`)

| └─left_temperature:frame (`<camera_name>_temp_left_link`)

└─imu_frame (`<camera_name>_imu_link`) (*only ZED Mini and ZED 2 and ZED 2i*)

└─magnetometer_frame (`<camera_name>_mag_link`) (*only ZED 2 and ZED 2i*)

└─barometer_frame (`<camera_name>_baro_link`) (*only ZED 2 and ZED 2i*)

ZED Mini #

The ZED Mini provides the same information as the ZED, plus the inertial data from the IMU sensor. The IMU data are used internally to generate the pose in the Map frame with the Tracking sensor fusion algorithm.

📌 Note: The initial pose in Odometry frame can be set to the first pose received by the Tracking module by setting the parameter

init_odom_with_first_valid_posetotrue.

ZED 2 / ZED 2i #

The ZED 2 and ZED 2i provide the same information as the ZED and the ZED Mini, plus the data from a new set of sensors. The ZED 2 and ZED 2i provide magnetometer data to get better and absolute information about the YAW angle, atmospheric pressure information to be used to estimate the height of the camera with respect to a reference point and temperature information to check the camera health in a complex robotic system.

Services #

The ZED node provides the following services:

reset_tracking: Restarts the Tracking module setting the initial pose to the value available in the param server.reset_odometry: Resets the odometry values eliminating the drift due to the Visual Odometry algorithm, setting the newodometryvalue to the latest cameraposereceived from the tracking module.set_pose: Sets the current pose of the camera to the value passed as single parameters ->X, Y, Z[m],R, P, Y[rad].save_area_memory: Triggers the saving of the current area memory used for relocation, if area memory is enabled.set_led_status: Sets the status of the blue led ->True: LED ON,False: LED OFF.toggle_led: Toggles the status of the blue led, returning the new status.start_svo_recording: Starts recording an SVO file. If no filename is provided the defaultzed.svois used. If no path is provided with the filename the default recording folder is~/.ros/.stop_svo_recording: Stops an active SVO recording.start_remote_stream: Starts streaming over the network to allow processing of ZED data on a remote machine. See Remote streaming.stop_remote_stream: Stops streaming over the network.start_3d_mapping: Starts the Spatial Mapping processing. See Spatial Mapping.stop_3d_mapping: Stops the Spatial Mapping processing (works even with an automatic start from the configuration file).save_3d_map: Triggers the saving of the current 3D fused point cloud if the mapping module is enabled.enable_object_detection: Starts/Stop the Object Detection processing. See Object Detection

📌 Note: Currently the H26x SVO compression uses the resolution and the real FPS to calculate the bitrate. This feature can lead to some issues of quality when FPS is low. On NVIDIA® Jetson™ TX1 and NVIDIA® Jetson™ TX2, the FPS is quite low at the beginning of the grab loop, therefore it could be better to wait for some grab calls before calling

start_svo_recordingso that the camera FPS is stabilized at the requested value (15fps or more).

Remote streaming #

With SDK v2.8 has been introduced the capability to acquire ZED data and stream it on a remote machine over the network. This feature is useful when the ZED is connected to a machine with limited CUDA capabilities while a high-definition analysis is required. Starting the streaming without activating any other feature (i.e. depth processing, positional tracking, point cloud publishing, …) requires only the power for H264 or H265 compression.

To start streaming the service start_remote_stream must be called with the following parameters:

codec(def. 0): Defines the codec used for streaming (0: AVCHD [H264],1: HEVC [H265])📌 Note: If HEVC (H265) is used, make sure the receiving host is compatible with HEVC decoding (basically a Pascal NVIDIA® card). If not, prefer to use AVCHD (H264) since every compatible NVIDIA® card supports AVCHD decoding

port(def. 30000): Defines the PORT the data will be streamed on.📌 Note: port must be an even number. Any odd number will be rejected uint16 port=30000

bitrate(def. 2000): Defines the streaming BITRATE in Kbits/sgop_size(def. -1): Defines the GOP SIZE in frame unit.📌 Note: if value is set to -1, the gop size will match 2 seconds, depending on the camera fps. The gop size determines the maximum distance between IDR/I-frames. Very high GOP size will result in slightly more efficient compression, especially on static scene. But it can result in more latency if IDR/I-frame packet are lost during streaming. Maximum allowed value is 256 (frames)

adaptative_bitrate(def. False): Enable/Disable adaptive bitrate.📌 Note: Bitrate will be adjusted regarding the number of packet loss during streaming. If activated, bitrate can vary between [bitrate/4, bitrate]. Currently, the adaptive bitrate only works when “sending” device is a NVIDIA® jetson (X1, X2, Xavier, Nano)

Receive a remote stream #

To acquire the streaming on a remote machine start a ZED node with the following command:

roslaunch zed_wrapper zed.launch stream:=<sender_IP>:<port>

For example:

roslaunch zed_wrapper zed.launch stream:=192.168.1.127:30000

📌 Note: the stream contains only visual information. Using a ZED Mini camera the inertial topic will not be available if not subscribed using ROS standard methods.

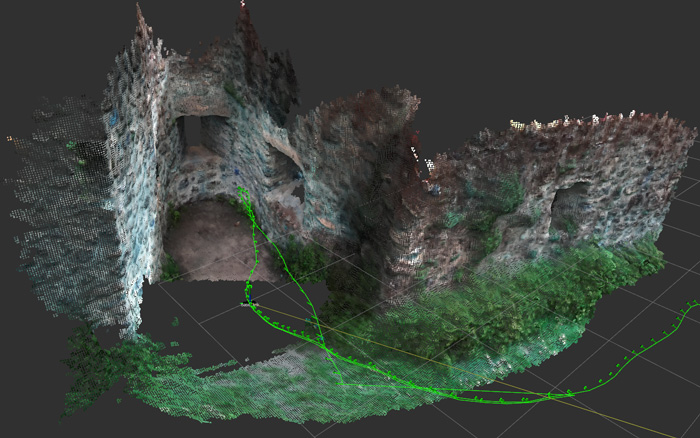

Spatial Mapping #

The ZED node provides a basilar mapping module publishing a 3D map of the environment as a 3D color point cloud (mapping/fused_cloud).

Spatial Mapping is disabled by default as it’s a heavy consuming process, it can be enabled setting to true the parameter mapping/mapping_enabled in the file params/common.yaml.

Spatial Mapping can be enabled manually at any time using the start_3d_mapping service.

To reduce the computational power requirements, use a value higher than 0.1 m for resolution_m and decrease the value of fused_pointcloud_freq to reduce the frequency of the point cloud topic publishing.

It is possible to save the generated 3D map as a point cloud in the OBJ, OBJ binary or PLY format, by calling the save_3d_map service.

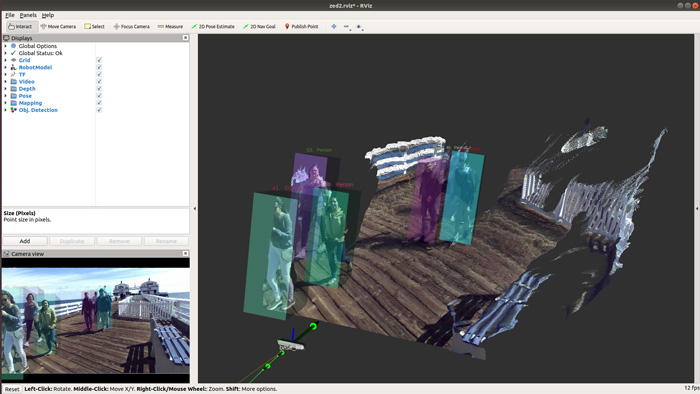

Object Detection #

The Object Detection module allows the detection and tracking of Objects.

Object detection is disabled by default as it’s a heavy-consuming process, it can be automatically enabled when the node starts setting to true the parameter object_detection/od_enabled in the file params/common.yaml.

If Object Detection is disabled it can be manually enabled at any time using the enable_object_detection service.

The list of the detected objects is published as a custom topic of type ObjectStamped, defined in the package zed_interfaces. For details about the topic fields please read the Object Detection full documentation.

Object Tracking #

Enabling Object Tracking allows getting information frame by frame about the status of each detected object. The same object is identified with the same ID in all the frames after its first detection and its position is estimated if an occlusion occurs.

Each detected object can get four different values about their Tracking Status:

- OFF - The tracking is not yet initialized, and the object ID is not usable

- OK - The object is tracked

- SEARCHING - The object couldn’t be detected in the image and is potentially occluded, the trajectory is estimated

- TERMINATE - This is the last searching state of the track, the track will be deleted in the next

retrieveObject

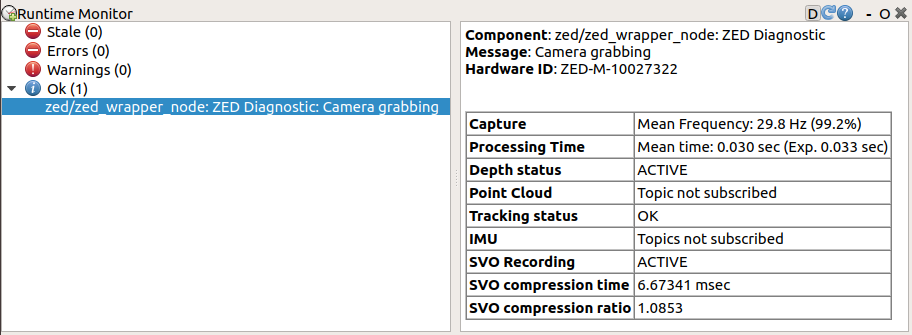

Diagnostic #

The ZED node publishes diagnostic information that can be used by the robotics system using a diagnostic_aggregator node.

Using the Runtime monitor plugin of rqt it is possible to get all the diagnostic information and check that the node is working as expected:

A brief explanation of each field:

Component: name of the diagnostic componentMessage: summary of the status of the ZED nodeHardwareID: Model of the ZED camera and its serial numberCapture: grabbing frequency (if video or depth data are subscribed) and the percentage with respect to the camera frame rateProcessing time: time in seconds spent to elaborate data and the time limit to achieve max frame ratePlaying SVO: (visible only if playing an SVO file) current frame position in the SVO file over the total frame countDepth status: indicates if the depth processing is performedPoint Cloud: point cloud publishing frequency (if there is at least a subscriber) and the percentage with respect to the camera frame rateFloor Detection: if the floor detection is enabled, indicates if the floor has been detected and the camera position correctly initializedTracking status: indicates the status of the positional tracking if enabledObject data processing: if Object Detection is enabled indicates the time required to process the data relative to detected objectsIMU: the publishing frequency of the IMU topics, if the camera is the ZED Mini and there is at least a subscriberLeft CMOS Temp.: (only ZED 2) the temperature of the CMOS of the left camera sensor [-273.15°C if not valid]Right CMOS Temp.: (only ZED 2) the temperature of the CMOS of the right camera sensor [-273.15°C if not valid]SVO Recording: indicates if the SVO recording is activeSVO Compression time: average time spent on frame compressingSVO Compression ratio: average frame compression ratio

Using multiple ZEDs #

It is possible to use multiple ZED cameras with ROS. Simply launch the node with the zed_multi_cam.launch file:

roslaunch zed_wrapper zed_multi_cam.launch

Assigning a GPU to a camera #

To improve performance, you can specify the gpu_id of the graphic card that will be used for the depth computation in the launch file. The default value (-1) will select the GPU with the highest number of CUDA cores. When using multiple ZEDs, you can assign each camera to a GPU to increase performance.

Limitations #

Performance #

This wrapper lets you quickly prototype applications and interface the ZED with other sensors and packages available in ROS. However, the ROS layer introduces significant latency and a performance hit. If performance is a major concern for your application, please consider using the ZED SDK library.

Multiple ZEDs #

The ZED camera uses the maximum bandwidth provided by USB 3.0 to output video. When using multiple ZEDs, you may need to reduce camera framerate and resolution to avoid corrupted frames (green or purple frames). You can also use multiple GPUs to load-balance computations and improve performance.